Free ChatGPT Lessons Learned From Google

Author: Virgil | Date: 25-01-24 10:16 | Views: 10 | Comments: 0

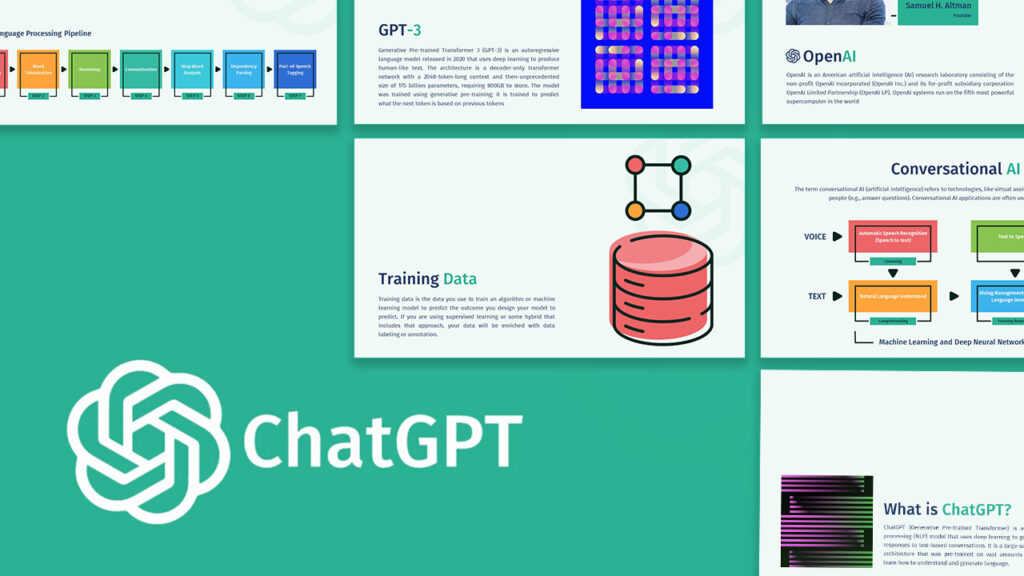

A workshop version of this article is available on YouTube. Gumroad and YouTube Kids use this model. What language (jargon, technical terms) do they use? Free ChatGPT's advanced natural language processing capabilities allow it to understand complex queries and provide accurate and relevant information. Deterministic computing is still the dominant type, as the vast majority of humanity is not even aware of the capabilities of probabilistic computing, aka Artificial Intelligence. The AI writing capabilities of GPT-3 are unparalleled, making it a game-changer in the field of content creation. Its ChatGPT field acts like an AI assistant guiding users through each step of the form submission process. Value(field, value): Sets the value of a field on the GlideRecord. This may occur even if you try to set the context yourself explicitly. Whether they are your private files or the internal files of the company you work for, these files couldn't have been part of any commercial model's training set because they are inaccessible on the open internet. And unless you know about Retrieval Augmented Generation (RAG), you might think that the time of personal and private company assistants is still far away.

Imagine that you have a bunch of internal software documentation, financial statements, legal documents, design guidelines, and much more in your company that employees frequently use. A fine-tuned Hungarian GPT-4 model would probably handle Hungarian questions much better than the base model. This model will perform much better in answering Python-related questions than the Llama foundation model. These are the apps that will survive the next OpenAI release or the emergence of a better model. Although there are certainly apps that are really just a better frontend in front of the OpenAI API, I want to point out a different kind. And instead of limiting the user to a small number of queries, some of the apps would truncate responses and give users only a snippet until they started a subscription. As expected, using the smaller chunk size while retrieving a larger number of documents resulted in the highest levels of both Context Relevance and Chunk Relevance. The significant variations in Context Relevance suggest that certain questions may require retrieving more documents than others. They show you how effective leaders use questions to encourage participation and teamwork, foster creative thinking, empower others, create relationships with customers, and solve problems. LLMs can iteratively work with users and ask them questions to develop their specifications, and can also fill in underspecified details using common sense.
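To make the chunk size parameter concrete, here is a minimal sketch of word-based chunking. The function name `chunk_text`, the word-level splitting, and the `overlap` parameter are my assumptions for illustration; the setup discussed above may well chunk by characters or tokens instead.

```python
def chunk_text(text: str, chunk_size: int, overlap: int = 0) -> list[str]:
    """Split text into chunks of at most chunk_size words.

    Consecutive chunks share `overlap` words (an assumed, optional feature).
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")

    words = text.split()
    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once the current window has reached the end of the text.
        if start + chunk_size >= len(words):
            break
    return chunks


if __name__ == "__main__":
    sample = "one two three four five six seven eight nine ten"
    for chunk in chunk_text(sample, chunk_size=4, overlap=1):
        print(chunk)
```

A smaller `chunk_size` produces more, shorter chunks, which is why it tends to be paired with retrieving a larger number of them.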

Since it is an extremely rare language (only official in Hungary), the resources on the internet that can be used for training are minimal compared to English. Hallucinations are frequent, calculations are incorrect, and running inference on problems that don't require AI just because it is the buzzword nowadays is expensive compared to running deterministic algorithms. In terms of implementation, these calculations can be somewhat organized "by layer" into highly parallel array operations that can conveniently be done on GPUs. Then, when a user asks something, relevant sentences from the embedded documents can be retrieved with the help of the same embedding model that was used to embed them. In the next step, these sentences need to be injected into the model's context, and voilà, you just extended a foundation model's knowledge with thousands of documents without requiring a larger model or fine-tuning. I won't go into how to fine-tune a model, embed documents, or add tools to the model's hands because each is a large enough topic to cover in a separate post later. My first step was to add some tools to its hands to fetch real-time market data such as the actual price of stocks, dividends, well-known ratios, financial statements, analyst recommendations, etc. I could implement this for free since the yfinance Python module is more than enough for a simple purpose like mine.
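The retrieve-then-inject flow can be sketched in a few lines. This is only an illustration under loud assumptions: `embed` here is a toy bag-of-words counter standing in for a real embedding model, and the names `retrieve` and `build_prompt` as well as the prompt wording are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, sentences: list[str], top_k: int = 2) -> list[str]:
    """Rank sentences by similarity to the question, using the same embed() for both."""
    query_vector = embed(question)
    ranked = sorted(sentences, key=lambda s: cosine(query_vector, embed(s)), reverse=True)
    return ranked[:top_k]


def build_prompt(question: str, context: list[str]) -> str:
    """Inject the retrieved sentences into the text that is sent to the model."""
    joined = "\n".join(f"- {sentence}" for sentence in context)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{joined}\n\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    docs = [
        "Vacation requests must be filed two weeks in advance.",
        "The design system uses a 4px spacing grid.",
        "Expense reports are due on the last day of the month.",
    ]
    question = "When are expense reports due?"
    print(build_prompt(question, retrieve(question, docs, top_k=1)))
```

In a real setup the vectors would be computed once and stored, and only the question would be embedded at query time. The key point survives the simplification: the question and the documents go through the same embedding function.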

It looks like we now have a good grasp of our chunking parameters, but it's worth testing another embedding model to see if we can get better results. Therefore, our focus will be on improving the RAG setup by adjusting the chunking parameters. When the model decides it's time to call a function for a given task, it will return a special message containing the name of the function to call and its parameters. When the model has access to more tools, it may return multiple tool calls, and your job is to call each function and provide the answers. Note that the model never calls any function. With fine-tuning, you can change the default style of the model to suit your needs better. Of course, you can combine these if you want. What I want to answer below is the why. Why do you need an alternative to ChatGPT? It could be beneficial to explore different embedding models or different retrieval methods to address this issue. In neither case did you have to change your embedding logic since a different model handles that (an embedding model).
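Since the model only names the function and your code does the calling, the dispatch step can be sketched as follows. The message shape (a dict with `name` and a JSON string in `arguments`), the `TOOLS` registry, and the `get_stock_price` stub with its canned data are all assumptions for illustration, not any provider's actual API. A real tool could look the price up with yfinance instead.

```python
import json
from typing import Any, Callable


def get_stock_price(symbol: str) -> dict[str, Any]:
    """Hypothetical tool. This stub returns canned data instead of live market data."""
    canned = {"ACME": 123.45}
    return {"symbol": symbol, "price": canned.get(symbol)}


# Registry mapping the names the model may return to real Python functions.
TOOLS: dict[str, Callable[..., Any]] = {"get_stock_price": get_stock_price}


def run_tool_calls(tool_calls: list[dict[str, str]]) -> list[dict[str, str]]:
    """Execute every tool call the model requested and collect the answers.

    The model never runs anything itself; it only says what to call and with
    which parameters.
    """
    results = []
    for call in tool_calls:
        func = TOOLS.get(call["name"])
        if func is None:
            output = {"error": f"unknown tool: {call['name']}"}
        else:
            output = func(**json.loads(call["arguments"]))
        results.append({"name": call["name"], "content": json.dumps(output)})
    return results


if __name__ == "__main__":
    requested = [{"name": "get_stock_price", "arguments": '{"symbol": "ACME"}'}]
    for result in run_tool_calls(requested):
        print(result)
```

The results would then be sent back to the model as additional messages so it can write the final answer.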
