The Ten Key Elements in Free GPT

Page information

Author: Mittie Rodrigue | Date: 25-01-24 22:07 | Views: 2 | Comments: 0

This week, MIT Technology Review editor in chief Mat Honan joins the show to chronicle the history of Slack as the software suite turns 10 years old. A member of the U.S. House of Representatives, Jake Auchincloss, wasted no time using this untested and still poorly understood technology to deliver a speech on a bill supporting the creation of a new artificial intelligence center. With the latest update, when using Quick Chat, you can now use the Attach Context action to attach context such as files and images to your Copilot request. With Ma out of the public eye, they now hang on the words of entrepreneurs like Xiaomi’s Lei Jun and Qihoo 360’s Zhou Hongyi. As you can see, it just assumed and gave a response of 38 words even though we allowed it to go up to 50 words. It was not overridden, as you can see from the response snapshot below. → For example, let's look at one case. → An example of this would be an AI model designed to generate summaries of articles that ends up producing a summary that includes details not present in the original article, or even fabricates information entirely. Data filtering: When you don't need every piece of data in your raw data, you can filter out the unnecessary data.
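The data filtering step mentioned above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed pipeline: the record fields (`id`, `text`, `lang`) and the `filter_records` helper are hypothetical names chosen for the example.

```python
# Hypothetical raw records; the field names are assumptions for illustration.
raw_records = [
    {"id": 1, "text": "Reset your password here", "lang": "en"},
    {"id": 2, "text": "", "lang": "en"},
    {"id": 3, "text": "Bonjour", "lang": "fr"},
    {"id": 4, "text": "Quarterly report attached", "lang": "en"},
]


def filter_records(records, lang="en"):
    """Keep only records with non-empty text in the requested language."""
    return [r for r in records if r["text"].strip() and r["lang"] == lang]


filtered = filter_records(raw_records)
print([r["id"] for r in filtered])  # [1, 4]
```

Filtering before the data reaches the model keeps the prompt smaller and removes entries that could not contribute anything useful.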

GANs are a particular type of network that uses two neural networks, a discriminator and a generator, to generate new data that is similar to the given dataset. They compared ChatGPT's performance to traditional machine learning models that are commonly used for spam detection. GUVrOa4V8iE) and what people share: 4o is a specialized model that can be good for processing large prompts with plenty of input and instructions, and it can show better performance. Suppose we give the same input and explicitly ask the model not to override it in the next two prompts. You should know that you can combine chain-of-thought prompting with zero-shot prompting by asking the model to perform reasoning steps, which can often produce better output. → Let's see an example where you can combine it with few-shot prompting to get better results on more complex tasks that require reasoning before responding. The automation of repetitive tasks and the provision of fast, accurate information improve overall efficiency and productivity. Instead, the chatbot responds with information based on the training data in GPT-4 or GPT-4o.
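One way to picture combining chain-of-thought prompting with zero-shot or few-shot prompting is a small prompt builder. The sketch below only assembles text and does not call any model; the function name `build_cot_prompt`, the `Q:`/`A:` layout, and the arithmetic examples are assumptions made for illustration.

```python
def build_cot_prompt(question, examples=None):
    """Build a chain-of-thought prompt.

    With no examples this is zero-shot CoT; with examples it is few-shot CoT.
    Each example is a (question, reasoning, answer) tuple.
    """
    parts = []
    for ex_question, ex_reasoning, ex_answer in examples or []:
        # Worked examples show the reasoning before the final answer.
        parts.append(
            f"Q: {ex_question}\nA: {ex_reasoning} The answer is {ex_answer}."
        )
    # The trailing cue asks the model to reason before answering.
    parts.append(f"Q: {question}\nA: Let's think step by step.")
    return "\n\n".join(parts)


worked_examples = [
    (
        "A shop has 3 boxes of 4 pens. How many pens are there?",
        "Each box holds 4 pens and there are 3 boxes, so 3 * 4 = 12.",
        "12",
    ),
]
prompt = build_cot_prompt(
    "A shelf has 5 rows of 6 books. How many books are there?",
    worked_examples,
)
print(prompt)
```

Calling `build_cot_prompt` without `examples` yields the zero-shot variant, so the same helper covers both cases.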

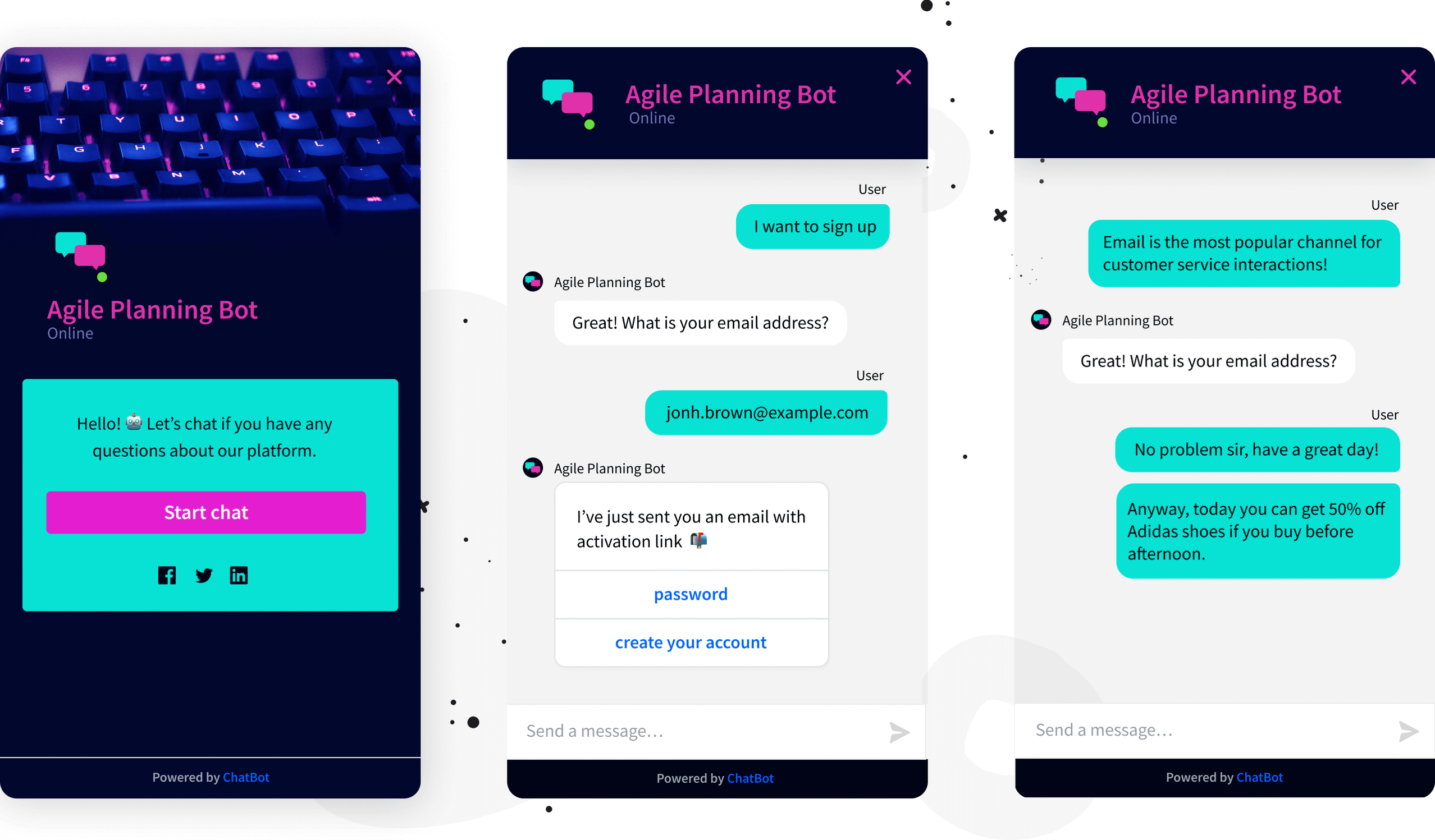

Generic large language models (LLMs) can't address issues unique to you or your company's proprietary data because they're trained on publicly available information, not your custom data. While LLMs are great, they still fall short on more complex tasks when using zero-shot prompting (discussed in the 7th point). This approach yields impressive results for mathematical tasks that LLMs otherwise often solve incorrectly. Using the examples provided, the model learns a specific behavior and gets better at carrying out similar tasks. Identifying specific pain points where ChatGPT can provide significant value is crucial. ChatGPT by OpenAI is the most well-known AI chatbot currently available. If you’ve used ChatGPT or similar services, you know it’s a versatile chatbot that can help with tasks like writing emails, creating marketing strategies, and debugging code. It is more like giving successful examples of completing tasks and then asking the model to perform the task. AI prompting can help direct a large language model to execute tasks based on different inputs.
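Giving successful examples and then asking the model to perform the task can be laid out as a chat-style message list. This is a sketch under assumptions: the `system`/`user`/`assistant` role names follow a convention common to chat APIs, and `build_few_shot_messages` plus the sentiment examples are hypothetical. No request is sent to any service here.

```python
def build_few_shot_messages(instruction, examples, new_input):
    """Return a message list: instruction, solved examples, then the new input."""
    messages = [{"role": "system", "content": instruction}]
    for example_input, example_output in examples:
        # Each solved example is shown as a user turn plus the desired reply.
        messages.append({"role": "user", "content": example_input})
        messages.append({"role": "assistant", "content": example_output})
    messages.append({"role": "user", "content": new_input})
    return messages


messages = build_few_shot_messages(
    "Classify the sentiment of each review as positive or negative.",
    [
        ("The battery lasts all day.", "positive"),
        ("The screen cracked within a week.", "negative"),
    ],
    "Setup took five minutes and everything just worked.",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```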

That is the simplest form of CoT prompting, zero-shot CoT, where you literally ask the model to think step by step. Chain-of-thought (CoT) prompting encourages the model to break down complex reasoning into a series of intermediate steps, resulting in a well-structured final output. This is the response of an ideal result after we provided the reasoning step. Ask QX, however, takes it a step further with its ability to integrate with creative ventures. However, it falls short when handling questions specific to certain domains or your company’s internal knowledge base. Constraint-based prompting involves adding constraints or conditions to your prompts, helping the language model focus on specific aspects or requirements when generating a response. Few-shot prompting is a prompt engineering technique that involves showing the AI a few examples (or shots) of the desired results. While frequent human review of LLM responses and trial-and-error prompt engineering can help you detect and address hallucinations in your application, this approach is extremely time-consuming and difficult to scale as your application grows. Prompt engineering is the practice of creating prompts that produce clear and useful responses from AI tools. The Protective MBR protects GPT disks from previously released MBR disk tools such as Microsoft MS-DOS FDISK or Microsoft Windows NT Disk Administrator.
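Constraint-based prompting, as described above, can be illustrated by appending explicit conditions to a task and then checking a response against one of them. The helper names `add_constraints` and `within_word_limit`, and the sample task, are hypothetical; the word count is a simple whitespace split, which is an assumption about how "words" are measured.

```python
def add_constraints(task, constraints):
    """Append a bulleted list of constraints to a task description."""
    lines = [task, "", "Constraints:"]
    lines += [f"- {constraint}" for constraint in constraints]
    return "\n".join(lines)


def within_word_limit(response, max_words):
    """Check a response against a word limit using a whitespace split."""
    return len(response.split()) <= max_words


constrained_prompt = add_constraints(
    "Summarize the article for a general audience.",
    ["Use at most 50 words.", "Include only details present in the article."],
)
print(constrained_prompt)

sample_response = "The article explains how prompts guide a language model."
print(within_word_limit(sample_response, 50))  # True
```

A check like `within_word_limit` only verifies the upper bound; a response of 38 words under a 50-word limit passes, which matches the behavior noted earlier in the post.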

